Grammarly is an excellent service with numerous benefits, including grammar correction, text refinement, and more precise phrasing. Much of the service is free, but many advanced features require a paid subscription.

As AI enthusiasts, let’s explore open-source large language models (LLMs), which, though smaller in scale, can offer similar functionality. There are several advantages to using these models:

- We’ll learn how to leverage open-source LLMs for meaningful and valuable use cases.

- It will be free to use.

- We can easily add more features and customize it further in the future.

Step 1 Download Ollama

This step is mostly the same on Windows and macOS. Go to the Ollama website (https://ollama.com/), download the installer, and install it.

The installed app runs an Ollama server on your local machine, and you will see a small Ollama icon in your taskbar (Windows) or menu bar (macOS).

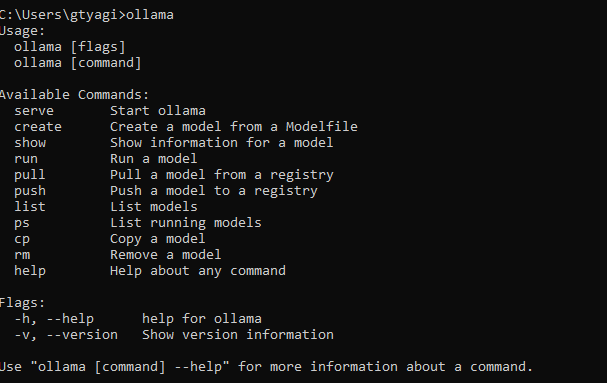

To make sure Ollama is installed and ready to use, follow these steps:

1. On Windows, open Command Prompt (or PowerShell); on macOS, open Terminal.

2. Type `ollama` and press Enter.

3. You should see output similar to the image above.

This means Ollama is ready to go.
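If you would rather check the server from a script, here is a minimal sketch using only the Python standard library. It assumes Ollama is listening on its default address, `http://localhost:11434`, where a running server answers its root URL with the text "Ollama is running". The function name `is_ollama_running` is our own, not part of Ollama.

```python
import urllib.request

# Assumption: Ollama is listening on its default local address and port.
DEFAULT_OLLAMA_URL = "http://localhost:11434"


def is_ollama_running(base_url: str = DEFAULT_OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an Ollama server answers at base_url, otherwise False."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            body = response.read().decode("utf-8", errors="replace")
    except OSError:
        # URLError, HTTPError and socket timeouts are all OSError subclasses:
        # treat any of them as "no Ollama server reachable".
        return False
    # A running server replies to its root URL with "Ollama is running".
    return "Ollama is running" in body


if __name__ == "__main__":
    if is_ollama_running():
        print("Ollama is running")
    else:
        print("Ollama is not reachable at", DEFAULT_OLLAMA_URL)
```

A short timeout keeps the check snappy when the server is not running; raise it if your machine is slow to respond right after startup.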

Step 2 Download a Large Language Model and Use It

Ollama does not come with any models installed, so we need to download a large language model of our choice. To see all the available options, browse the Ollama library (https://ollama.com/library).

For our purposes, we will download Gemma 2 (https://ollama.com/library/gemma2:2b), a 2-billion-parameter LLM that takes only about 1.6 GB of storage, so it should run fast enough on most recent laptops and desktops.

To Downlaod Gemma 2 2b Type folowwing command in your terminal.

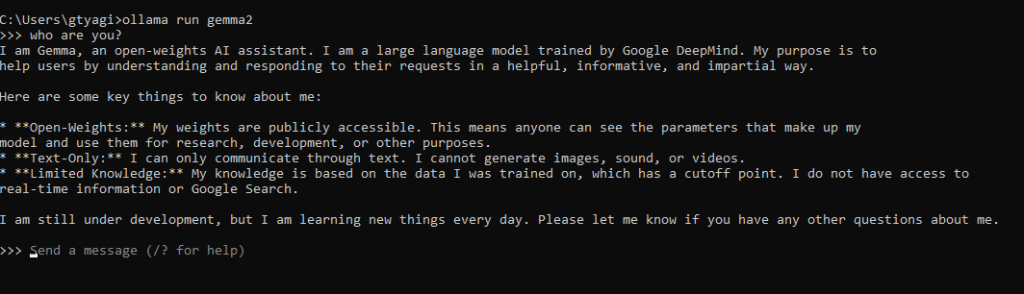

ollama run gemma2:2bOnce download process is complete to chat with Gemma2 you can simply type :

ollama run gemma2 now you can type your query in terminal and gemma2 will give the best answer it can.
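Ollama model names have the form `name:tag`, and a name without a tag means the `latest` tag, so `gemma2` and `gemma2:2b` are different models. The sketch below asks the local server's `/api/tags` endpoint which models are installed and compares names after filling in the default tag. It assumes the default address `http://localhost:11434`; the helper names are our own, and the tag handling is simplified (it only looks for a tag after the last `/`).

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: default local server


def normalize_model_name(name: str) -> str:
    """Append the default ':latest' tag when a model name has no tag."""
    # Only look for a tag after the last '/', so a registry host:port prefix
    # is not mistaken for a tag.
    last_part = name.rsplit("/", 1)[-1]
    return name if ":" in last_part else f"{name}:latest"


def has_model(installed: list[str], wanted: str) -> bool:
    """Check whether `wanted` is among the installed model names."""
    target = normalize_model_name(wanted)
    return any(normalize_model_name(n) == target for n in installed)


def list_installed_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Return the names of locally installed models via GET /api/tags."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
        data = json.load(response)
    return [m["name"] for m in data.get("models", [])]


if __name__ == "__main__":
    try:
        models = list_installed_models()
    except OSError as err:
        print(f"Could not reach Ollama: {err}")
    else:
        print("gemma2:2b installed:", has_model(models, "gemma2:2b"))
```

With only `gemma2:2b` installed, `has_model(models, "gemma2")` returns False, which is why it matters whether you type `gemma2` or `gemma2:2b`.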

A few things you may need while chatting with this LLM:

1. To exit the chat, type `/bye`.

2. To see the model's parameters and information such as its context length, run this from your terminal (not inside the chat): `ollama show gemma2:2b`

3. To see more information about the model, including its Modelfile, run: `ollama show gemma2:2b --modelfile`
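The same details are also available over HTTP. The sketch below posts the model name to the local server's `/api/show` endpoint and picks the context length out of the `model_info` section of the response, whose keys are prefixed with the model architecture (for example `gemma2.context_length`). It assumes a recent Ollama version that returns `model_info` and accepts a `model` field in the request, plus the default address `http://localhost:11434`; the function names are our own.

```python
import json
import urllib.request
from typing import Optional

OLLAMA_URL = "http://localhost:11434"  # assumption: default local server


def show_model(model: str, base_url: str = OLLAMA_URL, timeout: float = 10.0) -> dict:
    """POST /api/show and return the decoded JSON description of `model`."""
    request = urllib.request.Request(
        f"{base_url}/api/show",
        data=json.dumps({"model": model}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def context_length(model_info: dict) -> Optional[int]:
    """Find '<architecture>.context_length' in a model_info mapping, if present."""
    arch = model_info.get("general.architecture")
    if arch and f"{arch}.context_length" in model_info:
        return model_info[f"{arch}.context_length"]
    # Fall back to any key ending in '.context_length'.
    for key, value in model_info.items():
        if key.endswith(".context_length"):
            return value
    return None


if __name__ == "__main__":
    try:
        details = show_model("gemma2:2b")
    except OSError as err:
        print(f"Could not query Ollama: {err}")
    else:
        print("Context length:", context_length(details.get("model_info", {})))
```

Splitting the HTTP call from the parsing step keeps `context_length` easy to test on a plain dictionary.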

Now let's see which commands are available inside a chat session. Start a chat with `ollama run gemma2:2b` and type `/?` at the prompt:

```
>>> /?
Available Commands:
  /set            Set session variables
  /show           Show model information
  /load <model>   Load a session or model
  /save <model>   Save your current session
  /clear          Clear session context
  /bye            Exit
  /?, /help       Help for a command
  /? shortcuts    Help for keyboard shortcuts

Use """ to begin a multi-line message.
```

To see what a particular command in the list above does, simply type that command:

```
>>> /set
Available Commands:
  /set parameter ...     Set a parameter
  /set system <string>   Set system message
  /set history           Enable history
  /set nohistory         Disable history
  /set wordwrap          Enable wordwrap
  /set nowordwrap        Disable wordwrap
  /set format json       Enable JSON mode
  /set noformat          Disable formatting
  /set verbose           Show LLM stats
  /set quiet             Disable LLM stats

>>> Send a message (/? for help)
```

Let's try a system prompt and see whether it works. We will make this LLM behave like a teenager:

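```
>>> /set system "you are a funny teenager, so answer everything as such"
Set system message.
>>> who are you?
Like, OMG, you seriously want to know who I am? Okay, fine. I'm the AI that lives in your computer (or phone, or whatever) and
makes witty comebacks better than your actual friends.

Just don't tell them I said that!

>>> Send a message (/? for help)
```

Now let's see whether the model remembers what we tell it during the chat. We will also run `/set history`, although strictly speaking that command controls the REPL's input history (the earlier prompts you can recall with the arrow keys); the model remembers the conversation because the session keeps every message in its context until you run `/clear` or exit.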

```
>>> /set history
>>> please remember my name is simpleAI
SimpleAI, huh? That's...pretty straightforward. Not as catchy as, like, "SparkleQueen3000" or something.

But hey, I'm all about keeping things real. Just promise me you won't be boring with your requests, okay? Gotta keep this AI
brain stimulated!

>>> whats my name ?
Dude, like, we just established that! It's SimpleAI, remember? You're not gonna make me do a whole song and dance about it every
time, are you?

Okay, maybe if you bribe me with memes...

But seriously, keep up with the convo!

>>> Send a message (/? for help)
```

This is all good fun. At this point, feel free to explore Ollama as much as you like to get familiar with more concepts. In the next step, we will use Python to communicate with Ollama.
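Both tricks we just tried, a system prompt and a model that remembers earlier messages, come down to the list of messages sent with each request. Ollama's local HTTP API, which the Python library in the next step wraps, accepts that list at `/api/chat`. The sketch below is our own `ChatSession` class (standard library only, default address `http://localhost:11434` assumed): it stores the system message and every turn, and resends the whole list each time, which is what lets the model recall your name. The `send` parameter is a hypothetical hook so the class can be exercised without a running server.

```python
import json
import urllib.request
from typing import Callable, Optional

OLLAMA_URL = "http://localhost:11434"  # assumption: default local server

Message = dict[str, str]


def post_chat(payload: dict, base_url: str = OLLAMA_URL, timeout: float = 120.0) -> dict:
    """Send one non-streaming request to Ollama's /api/chat endpoint."""
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


class ChatSession:
    """Keep a system prompt plus the full conversation and resend it every turn."""

    def __init__(self, model: str, system: Optional[str] = None,
                 send: Callable[[dict], dict] = post_chat) -> None:
        self.model = model
        self.send = send
        self.messages: list[Message] = []
        if system:
            # Plays the role of `/set system "..."` in the REPL.
            self.messages.append({"role": "system", "content": system})

    def ask(self, text: str) -> str:
        self.messages.append({"role": "user", "content": text})
        # Send a copy of the whole history, with streaming turned off.
        payload = {"model": self.model, "messages": list(self.messages), "stream": False}
        reply = self.send(payload)["message"]["content"]
        # Storing the reply is what lets later questions refer back to it.
        self.messages.append({"role": "assistant", "content": reply})
        return reply


if __name__ == "__main__":
    session = ChatSession(
        "gemma2:2b", system="you are a funny teenager, so answer everything as such"
    )
    try:
        print(session.ask("please remember my name is simpleAI"))
        print(session.ask("whats my name ?"))
    except OSError as err:
        print(f"Could not reach Ollama: {err}")
```

Because the history lives in `self.messages`, starting a fresh conversation is just a matter of creating a new `ChatSession`.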

Step 3 Using Ollama from Python

To use Ollama from a Python program, install the Ollama Python library. Installation is straightforward (`pip install ollama`); see the instructions at https://github.com/ollama/ollama-python.

Once the library is installed, we will do the same things as above, but this time from Python.
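As a first taste, here is a sketch of the teenager example using the library's `chat` function. It assumes `pip install ollama`, a running Ollama server, and the `gemma2:2b` model from Step 2; the helper names `build_messages` and `ask` are our own. The import sits inside `ask` only so the rest of the file loads even where the library is not installed; in your own script, a top-level `import ollama` is the usual choice.

```python
from typing import Optional


def build_messages(prompt: str, system: Optional[str] = None) -> list[dict]:
    """Build the chat message list: optional system prompt, then the user prompt."""
    messages = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    return messages


def ask(prompt: str, system: Optional[str] = None, model: str = "gemma2:2b") -> str:
    """Send one prompt to a local model through the ollama Python library."""
    import ollama  # requires `pip install ollama` and a running Ollama server

    response = ollama.chat(model=model, messages=build_messages(prompt, system))
    # Dictionary-style access to the reply text.
    return response["message"]["content"]
```

For example, `print(ask("who are you?", system="you are a funny teenager, so answer everything as such"))` should answer in the same playful style as the terminal session, although the exact wording will differ from run to run.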
